Automated Valuation Models (AVMs): Accuracy and Limitations

Introduction to Automated Valuation Models

Understanding the Core Concept of AVMs

Automated Valuation Models (AVMs) are now widely used to estimate property values. These systems analyze large datasets, including recent sales, property details, and local market conditions, to deliver near-instant valuations. Because the same method is applied to every property, AVMs offer a standardized alternative to traditional appraisals, which can be influenced by an individual appraiser's judgment. Their speed and consistency make them particularly valuable for time-sensitive transactions across the real estate sector.

Data Sources Fueling AVM Accuracy

The effectiveness of any AVM hinges on the quality of its underlying data. These systems draw from multiple sources, including county tax records, municipal building departments, and multiple listing services. When these records are complete and up to date, they enable the algorithm to identify meaningful patterns and relationships that lead to accurate value estimates. Gaps or inaccuracies in these records, however, can significantly degrade the model's output.

Key Advantages of Utilizing AVMs

The adoption of automated valuation methods brings several tangible benefits to market participants. For lenders, AVMs dramatically reduce the time required for mortgage underwriting, sometimes delivering valuations in minutes rather than days. Property investors benefit from the ability to quickly evaluate multiple opportunities, while homeowners gain immediate insight into their property's approximate worth. Perhaps most importantly, these systems help standardize valuations across markets, reducing the inconsistencies that can arise between individual appraisers.

Limitations and Potential Biases in AVMs

While powerful, AVMs aren't without their challenges. Properties with unique architectural features, or those in rapidly changing neighborhoods, are hard to value automatically because the model has few truly comparable sales to learn from. Seasonal market fluctuations and local economic factors can also create temporary distortions that automated systems might not immediately recognize. These limitations underscore the importance of using AVMs as one component in a comprehensive valuation strategy rather than as the sole determinant of value.

AVM Applications Across Diverse Real Estate Transactions

The versatility of automated valuation technology allows its application across numerous real estate scenarios. Insurance companies use AVMs to assess property values for coverage purposes, while tax authorities employ them to verify assessment equity. During the home buying process, both buyers and sellers frequently consult AVM estimates to establish initial pricing expectations. Even portfolio managers rely on these tools when evaluating large numbers of properties for investment decisions.

The Role of Technology in AVM Development

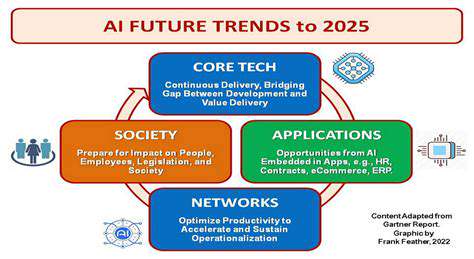

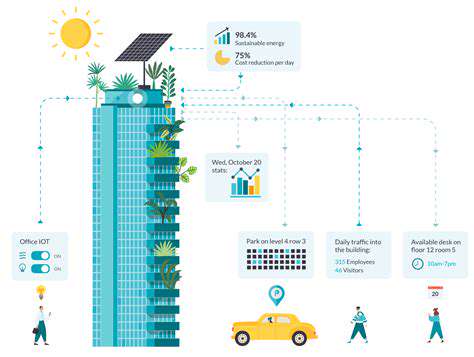

Recent advances in computing power and data science have transformed AVM capabilities. Modern systems incorporate machine learning techniques and can be retrained as new transaction data arrives. These models can identify subtle market patterns that might escape traditional statistical analysis. Cloud computing platforms now make it practical to process very large datasets in near real time, keeping valuations aligned with current market conditions.

Ensuring Accuracy and Reliability of AVM Results

Maintaining valuation accuracy requires ongoing system maintenance and oversight. Leading providers implement rigorous quality control protocols, including regular benchmarking against actual sales. Transparent documentation of methodology and data sources builds trust among users who need to understand the basis for valuation conclusions. Many firms also employ teams of data specialists who monitor system performance and implement adjustments as market conditions evolve.

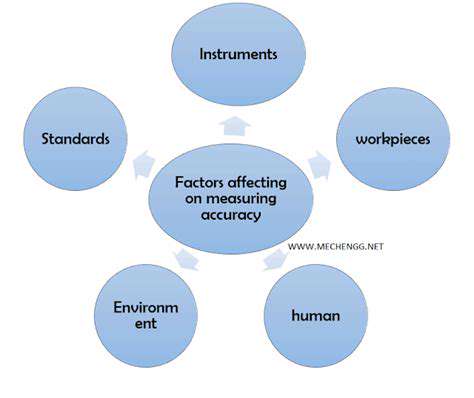

Factors Influencing AVM Accuracy

Pre-Processing Techniques

Effective data preparation forms the foundation for reliable automated valuations. Thorough cleaning and standardization of input data directly affect the model's predictive capabilities. This involves identifying and addressing anomalies, filling information gaps through imputation techniques, and ensuring consistent data formats across all sources. Proper normalization prevents variables with larger numeric ranges from dominating the analysis while preserving meaningful relationships in the data.
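As a rough illustration of two of the steps above, the sketch below fills missing values with the median and then rescales each variable to the 0 to 1 range. The field names and numbers are made up for the example, and median imputation with min-max scaling is only one of several reasonable choices.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical records: living area in square feet and bedroom count,
# each with one missing entry.
sqft = [1200, None, 1800, 2400, 1500]
bedrooms = [2, 3, None, 4, 3]

sqft_clean = impute_median(sqft)
bedrooms_clean = impute_median(bedrooms)

# After scaling, both variables share the same range, so square footage
# no longer dominates simply because its raw numbers are larger.
sqft_scaled = min_max_scale(sqft_clean)
bedrooms_scaled = min_max_scale(bedrooms_clean)
```

In a production pipeline the fill value and the scaling bounds would normally be computed on the training data only and then reused for new properties.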

Image Quality and Resolution

For AVMs incorporating visual property analysis, image characteristics significantly affect results. Crisp, high-resolution photographs enable more accurate assessment of property conditions and features that influence value. Blurry images or those with poor lighting can obscure important details about a home's exterior, landscaping, or structural elements. Standardized photography protocols help minimize variability and ensure consistent inputs for the valuation algorithm.

Model Selection and Training Parameters

Choosing the right analytical approach depends on the specific valuation context and available data. Different machine learning architectures offer varying strengths in handling spatial relationships, temporal patterns, or categorical features. The model's training regimen requires careful tuning: too many iterations on limited data can cause overfitting, while insufficient training may leave the model unable to capture important valuation factors. Cross-validation techniques help identify the right balance for reliable performance.
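To make the cross-validation idea concrete, here is a minimal sketch that uses k-fold cross-validation to choose how many comparable sales a simple nearest-neighbor estimator should average. The estimator, the single feature (living area), and the sales figures are all assumptions made for illustration, not a description of any particular AVM.

```python
def knn_estimate(train, sqft, k):
    """Average the prices of the k training homes closest in living area."""
    nearest = sorted(train, key=lambda rec: abs(rec[0] - sqft))[:k]
    return sum(price for _, price in nearest) / len(nearest)


def cross_validated_mape(records, k, folds=4):
    """Mean absolute percentage error of knn_estimate over held-out folds."""
    errors = []
    for fold in range(folds):
        # Every `folds`-th record is held out; the rest form the training set.
        test = records[fold::folds]
        train = [r for i, r in enumerate(records) if i % folds != fold]
        for sqft, price in test:
            estimate = knn_estimate(train, sqft, k)
            errors.append(abs(estimate - price) / price)
    return sum(errors) / len(errors)


# Hypothetical (living_area_sqft, sale_price) pairs.
sales = [
    (900, 182_000), (1000, 205_000), (1100, 214_000), (1200, 246_000),
    (1300, 255_000), (1400, 287_000), (1500, 296_000), (1600, 330_000),
    (1700, 338_000), (1800, 365_000), (1900, 377_000), (2000, 408_000),
]

# Score each candidate setting on data the estimator did not see,
# then keep the setting with the lowest held-out error.
scores = {k: cross_validated_mape(sales, k) for k in (1, 2, 3, 5, 8)}
best_k = min(scores, key=scores.get)
```

The same loop applies to any tunable setting, such as tree depth or the number of training iterations: the value that looks best on held-out folds is less likely to reflect overfitting than one chosen on the training data itself.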

Feature Engineering and Selection

Identifying the most predictive variables is a critical step in model development. Well-designed features that capture meaningful property characteristics lead to more accurate valuations. These might include derived metrics such as price per square foot by neighborhood or seasonal adjustment factors. Dimensionality-reduction techniques such as principal component analysis (PCA) can compress many correlated variables into a smaller set while preserving most of the information the valuation algorithm needs.
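The derived metric mentioned above, price per square foot by neighborhood, can be sketched as follows. The neighborhood names, record layout, and use of the median are assumptions chosen for the example.

```python
from collections import defaultdict
from statistics import median


def median_ppsf_by_neighborhood(sales):
    """Median sale price per square foot for each neighborhood."""
    grouped = defaultdict(list)
    for sale in sales:
        grouped[sale["neighborhood"]].append(sale["price"] / sale["sqft"])
    return {name: median(values) for name, values in grouped.items()}


# Hypothetical closed sales.
sales = [
    {"neighborhood": "Riverside", "sqft": 1000, "price": 250_000},
    {"neighborhood": "Riverside", "sqft": 2000, "price": 520_000},
    {"neighborhood": "Riverside", "sqft": 1500, "price": 360_000},
    {"neighborhood": "Hilltop", "sqft": 1200, "price": 420_000},
    {"neighborhood": "Hilltop", "sqft": 1600, "price": 528_000},
]

ppsf = median_ppsf_by_neighborhood(sales)

# Attach the neighborhood-level figure to a property being valued,
# so the model can use it as an input feature.
subject = {"neighborhood": "Hilltop", "sqft": 1400}
subject["neighborhood_ppsf"] = ppsf[subject["neighborhood"]]
```

When a feature like this is built from sale prices, it should be computed only from sales that closed before the valuation date and should exclude the subject property itself, otherwise the target leaks into the input.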

Data Augmentation and Overfitting Prevention

In markets with limited transaction history, additional techniques can expand the effective dataset. Synthetic data generation can help models learn from a broader range of hypothetical scenarios, provided the synthetic records preserve the statistical properties of real sales. Regularization methods and early stopping criteria prevent the model from memorizing training examples rather than learning general valuation principles. These approaches become especially important in volatile or low-volume markets.
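As one example of regularization, the sketch below fits a one-feature ridge (L2-penalized) regression in closed form and shows how a larger penalty shrinks the fitted slope. Ridge is just one common regularization method, picked here for illustration; the four sales and the penalty values are hypothetical.

```python
def ridge_slope(x, y, penalty):
    """Slope of a one-feature ridge regression fitted on centered data."""
    x_mean = sum(x) / len(x)
    y_mean = sum(y) / len(y)
    sxy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    sxx = sum((a - x_mean) ** 2 for a in x)
    # With penalty == 0 this is ordinary least squares; a positive
    # penalty pulls the slope toward zero.
    return sxy / (sxx + penalty)


# Hypothetical thin market: four sales, with living area in thousands of
# square feet and price in thousands of dollars.
area = [1.0, 1.5, 2.0, 2.5]
price = [210.0, 290.0, 330.0, 450.0]

slopes = {p: ridge_slope(area, price, p) for p in (0.0, 0.5, 2.0)}
for penalty, slope in slopes.items():
    print(f"penalty={penalty}: slope={slope:.1f}")
```

With so few sales, the unpenalized slope is driven heavily by individual transactions. The penalty trades a little bias for lower variance, and its strength is typically chosen by cross-validation.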

Validation and Evaluation Metrics

Rigorous testing protocols separate reliable models from those with limited practical utility. Evaluation with metrics such as mean absolute percentage error (MAPE) reveals how closely estimates align with actual sale prices. Testing across different market segments and time periods shows whether performance is robust or merely strong in narrow circumstances. The best models demonstrate consistent accuracy whether valuing luxury properties or modest homes, in rising or declining markets.
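A minimal sketch of segment-level evaluation with mean absolute percentage error follows. The segment names and dollar figures are invented for the example.

```python
def mape(estimates, sale_prices):
    """Mean absolute percentage error, in percent."""
    errors = [abs(e - p) / p for e, p in zip(estimates, sale_prices)]
    return 100 * sum(errors) / len(errors)


# Hypothetical AVM estimates paired with the prices the homes sold for.
results = {
    "entry_level": {
        "estimates": [190_000, 210_000, 260_000],
        "sales": [200_000, 200_000, 250_000],
    },
    "luxury": {
        "estimates": [1_100_000, 1_350_000],
        "sales": [1_000_000, 1_500_000],
    },
}

mape_by_segment = {
    name: mape(r["estimates"], r["sales"]) for name, r in results.items()
}
for name, value in mape_by_segment.items():
    print(f"{name}: MAPE = {value:.1f}%")
```

Reporting the metric per segment rather than as one overall number makes it visible when a model that looks accurate on average performs noticeably worse on one part of the market.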

Bias and Generalizability

Deliberate effort is needed to counteract potential biases in valuation models. Historical data may reflect past discrimination or market inefficiencies that shouldn't be carried forward into future valuations. Regular audits for demographic or geographic bias help ensure equitable treatment across all property types and neighborhoods. Models should demonstrate comparable accuracy for diverse property characteristics and owner demographics to serve all market participants fairly.
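One simple form such an audit could take is comparing the average signed error across groups, since a consistently negative or positive error points to systematic under- or overvaluation. The sketch below assumes hypothetical group labels, sales, and a 5 percent tolerance; a real audit would use larger samples and a tolerance justified for the use case.

```python
from collections import defaultdict


def signed_error_by_group(records):
    """Mean signed percentage error per group; positive means overvaluation."""
    grouped = defaultdict(list)
    for group, estimate, sale_price in records:
        grouped[group].append(100 * (estimate - sale_price) / sale_price)
    return {g: sum(v) / len(v) for g, v in grouped.items()}


def flag_groups(errors, tolerance_pct):
    """Groups whose mean signed error exceeds the tolerance in either direction."""
    return sorted(g for g, e in errors.items() if abs(e) > tolerance_pct)


# Hypothetical (group, avm_estimate, sale_price) records.
records = [
    ("tract_a", 204_000, 200_000),
    ("tract_a", 297_000, 300_000),
    ("tract_b", 180_000, 200_000),
    ("tract_b", 264_000, 300_000),
]

errors = signed_error_by_group(records)
flagged = flag_groups(errors, tolerance_pct=5.0)
print(flagged)
```

Here the estimates for the second group sit well below the sale prices, so that group would be flagged for review, while the first group's errors roughly cancel out.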

Read more about Automated Valuation Models (AVMs): Accuracy and Limitations

Hot Recommendations

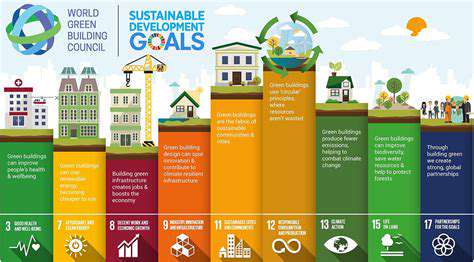

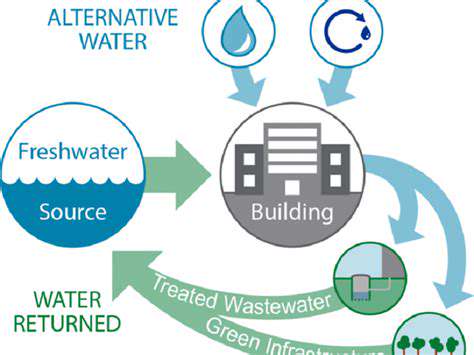

- Sustainable Real Estate Design Principles

- AI in Real Estate: Streamlining the Buying Process

- Climate Risk Disclosure: A Must for Real Estate

- Climate Risk Analytics: Essential for Real Estate Investment Funds

- Modular Sustainable Construction: Scalability and Speed

- Real Estate and Community Disaster Preparedness

- Smart Buildings and Advanced Building Analytics for Optimal Performance

- Smart Waste Sorting and Recycling in Buildings

- Sustainable Real Estate: A Strategic Advantage

- AI in Real Estate Transaction Processing: Speed and Accuracy