AI in Real Estate Market Prediction

Data Collection and Preparation

Data collection is the foundation of any AI-based analysis. Sourcing diverse datasets, verifying their integrity, and converting them into usable formats come before any modeling work. This phase typically demands well-designed data pipelines and rigorous validation, because the quality of a model's output depends directly on the quality of its input data.

Data refinement processes – including normalization, anomaly correction, and feature engineering – often consume more resources than initial acquisition. Meticulous preprocessing prevents algorithmic biases and ensures trustworthy analytical outcomes. Specialists must possess both technical proficiency in data wrangling tools and contextual understanding of the problem domain.
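As a rough illustration of the three refinement steps named above, the following sketch clips anomalous prices, derives a price-per-area feature, and rescales floor area to the range 0 to 1. The field names `price` and `area_sqm`, the median-absolute-deviation rule, and the cutoff `k=5.0` are assumptions chosen for this example, not requirements of any particular dataset or tool.

```python
from statistics import median


def clip_anomalies(values, k=5.0):
    """Clip values lying more than k median absolute deviations from the median."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    lo, hi = med - k * mad, med + k * mad
    return [min(max(v, lo), hi) for v in values]


def min_max_scale(values):
    """Rescale values linearly to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def prepare(listings):
    """Anomaly correction, feature engineering, and normalization in one pass."""
    # Anomaly correction: pull extreme prices back to the clipping bounds.
    prices = clip_anomalies([row["price"] for row in listings])
    areas = [row["area_sqm"] for row in listings]
    # Feature engineering: price per square metre from the corrected price.
    price_per_sqm = [p / a for p, a in zip(prices, areas)]
    # Normalization: floor area rescaled to [0, 1].
    area_scaled = min_max_scale(areas)
    return [
        {"price": p, "price_per_sqm": pps, "area_scaled": s}
        for p, pps, s in zip(prices, price_per_sqm, area_scaled)
    ]


# Hypothetical listings; the last price looks like a data-entry error.
listings = [
    {"price": 200_000, "area_sqm": 50},
    {"price": 220_000, "area_sqm": 55},
    {"price": 240_000, "area_sqm": 60},
    {"price": 260_000, "area_sqm": 65},
    {"price": 9_000_000, "area_sqm": 70},
]
rows = prepare(listings)
```

Clipping keeps the suspicious record in the dataset rather than dropping it. Whether that is appropriate depends on the domain, which is one reason preprocessing calls for contextual knowledge as well as tooling.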

Model Selection and Training

Choosing optimal algorithmic architectures requires careful evaluation of multiple dimensions: dataset characteristics, computational constraints, and business objectives. Neural networks, decision trees, and ensemble methods each offer distinct advantages depending on use case requirements.

Training is an iterative process in which a model's parameters are adjusted through repeated exposure to curated datasets. Careful hyperparameter tuning often determines whether a model performs well in practice or only on paper. Validating performance on held-out data throughout this phase helps identify optimization opportunities and detect overfitting.
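One common way to tune a hyperparameter while guarding against overfitting is to fit on a training split and compare candidates on a separate validation split. The sketch below does this for a single-feature ridge regression. The model choice, the function names, and the candidate grid are all assumptions made for illustration.

```python
def fit_ridge_1d(xs, ys, lam):
    """Fit y = slope * x + intercept with an L2 penalty `lam` on the slope."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx + lam == 0:
        raise ValueError("feature has no variance and lam is zero")
    slope = sxy / (sxx + lam)
    intercept = y_mean - slope * x_mean
    return slope, intercept


def mse(model, xs, ys):
    """Mean squared error of a (slope, intercept) model on the given data."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def select_lambda(train, valid, grid):
    """Return (lam, validation_mse, model) for the best candidate in `grid`."""
    best = None
    for lam in grid:
        model = fit_ridge_1d(train[0], train[1], lam)
        err = mse(model, valid[0], valid[1])
        # Keep the candidate with the lowest error on data not used for fitting.
        if best is None or err < best[1]:
            best = (lam, err, model)
    return best


# Hypothetical data: y = 2x + 1 with no noise.
train = ([1, 2, 3, 4], [3, 5, 7, 9])
valid = ([5, 6], [11, 13])
best_lam, best_err, best_model = select_lambda(train, valid, [0.0, 1.0, 10.0])
```

With noise-free data the unpenalized fit wins. On noisy real-world data a nonzero penalty may validate better, which is exactly what the held-out comparison is meant to reveal.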

Analysis and Interpretation

Translating model outputs into business intelligence requires both technical and domain expertise. Analysts must distinguish between statistical artifacts and meaningful patterns while considering operational contexts. Contextual interpretation transforms raw predictions into strategic assets.

Communicating results effectively means tailoring visualizations to each stakeholder group. Interactive dashboards and scenario simulations often prove more impactful than traditional static reports. Dynamic visualization bridges the gap between technical complexity and executive decision-making.

Deployment and Monitoring

Production implementation tests theoretical models against real-world variables. System integration challenges frequently emerge during this phase, requiring robust API architectures and failover mechanisms. Continuous performance tracking establishes feedback loops for model refinement.

Adaptive maintenance protocols ensure sustained relevance amidst evolving data landscapes. Scheduled retraining cycles and concept drift detection mechanisms maintain predictive accuracy over extended operational periods. This lifecycle approach distinguishes sustainable implementations from short-term experiments.
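A very simple drift check compares recent prediction errors with those from a reference period and triggers retraining when the recent errors are markedly larger. The sketch below is a heuristic under assumed settings (a 30-observation window and a 1.5x ratio threshold), not a formal statistical test.

```python
from statistics import mean


def drift_detected(errors, window=30, ratio_threshold=1.5):
    """Flag drift when recent mean absolute error exceeds the reference level.

    `errors` holds prediction errors in chronological order. The first
    `window` values form the reference period, the last `window` the recent one.
    """
    if len(errors) < 2 * window:
        return False  # not enough history to compare two full windows
    reference = mean([abs(e) for e in errors[:window]])
    recent = mean([abs(e) for e in errors[-window:]])
    if reference == 0:
        return recent > 0
    return recent / reference > ratio_threshold


# Hypothetical error histories.
stable = [1.0] * 60
shifted = [1.0] * 30 + [2.0] * 30
needs_retraining = drift_detected(shifted)
```

In a monitoring job, a positive result would typically schedule a retraining run or at least alert an analyst. The window size and threshold need calibrating against how noisy the errors normally are.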

Predicting Price Fluctuations: Unveiling Market Trends

Understanding Market Dynamics

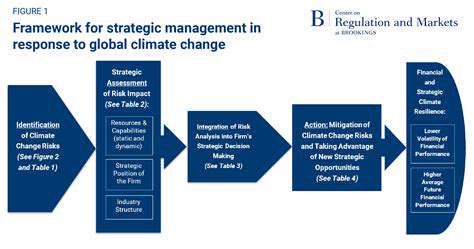

Price prediction models must account for multidimensional market forces – from macroeconomic indicators to micro-level transactional patterns. Behavioral finance principles reveal how cognitive biases systematically influence trading activity. Quantitative analysts increasingly incorporate sentiment analysis from alternative data sources to capture these psychological dimensions.

Modern analytical frameworks combine traditional technical indicators with machine learning techniques. This hybrid approach leverages the interpretability of classical methods while benefiting from AI's pattern recognition capabilities across high-dimensional datasets.

Analyzing Historical Trends and Patterns

Time-series analysis provides critical context for current market conditions. Seasonality decomposition, volatility clustering identification, and regime-switching detection techniques help contextualize present observations within historical norms. Structural break analysis proves particularly valuable for identifying paradigm shifts in market behavior.
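To make the idea of seasonality decomposition concrete, the sketch below estimates an additive seasonal profile as each phase's average deviation from the overall mean, then subtracts it from the series. It assumes the trend is negligible or has already been removed. A fuller decomposition would estimate the trend first, for example with a centered moving average.

```python
def seasonal_profile(series, period):
    """Average deviation from the overall mean for each phase of the cycle."""
    if len(series) < period:
        raise ValueError("series must cover at least one full period")
    overall = sum(series) / len(series)
    profile = []
    for phase in range(period):
        # Every `period`-th observation starting at `phase` shares a season.
        values = series[phase::period]
        profile.append(sum(values) / len(values) - overall)
    return profile


def deseasonalize(series, period):
    """Subtract the estimated seasonal profile from each observation."""
    profile = seasonal_profile(series, period)
    return [v - profile[i % period] for i, v in enumerate(series)]


# Hypothetical quarterly index with a repeating pattern over three years.
quarterly = [10, 20, 30, 40] * 3
profile = seasonal_profile(quarterly, period=4)
adjusted = deseasonalize(quarterly, period=4)
```

Once the seasonal component is removed, a current observation can be compared against historical norms without confusing an ordinary seasonal swing with a genuine shift.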

Statistical methods such as cointegration analysis test whether two or more non-stationary price series share a stable long-run relationship, even when they appear unrelated over short horizons. These techniques enable more robust portfolio construction by quantifying diversification benefits beyond simple correlation metrics.

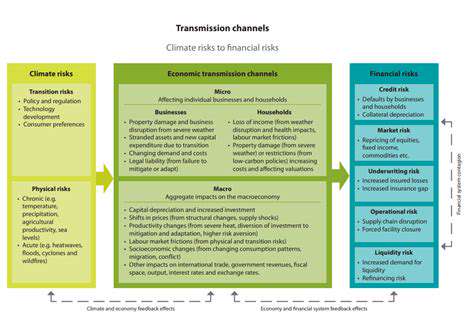

Evaluating External Factors and Influences

Contemporary market models increasingly incorporate unconventional data streams – satellite imagery, supply chain logistics, and even weather patterns. Forward-looking analysts now monitor geopolitical risk indices with the same rigor as traditional financial statements. Natural language processing techniques applied to earnings call transcripts and regulatory filings provide additional qualitative dimensions.

The emergence of alternative data marketplaces has democratized access to previously proprietary information sources. However, practitioners must develop robust validation frameworks to assess data quality and relevance before operational integration.

Developing Predictive Models and Strategies

Modern predictive architectures often employ ensemble approaches that combine multiple modeling techniques. This methodological diversity helps mitigate individual model weaknesses while preserving their respective strengths. Backtesting protocols must account for transaction costs and liquidity constraints to avoid unrealistic performance expectations.
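The effect of transaction costs on a backtest can be seen in a few lines. The sketch below averages several model forecasts into one position signal and compounds returns with a proportional cost charged whenever the position changes. The cost rate, the sign-based ensemble rule, and the sample numbers are assumptions for illustration, and liquidity constraints are not modeled.

```python
def ensemble_signal(predictions):
    """Average several model forecasts and map the result to a position."""
    avg = sum(predictions) / len(predictions)
    if avg > 0:
        return 1   # long
    if avg < 0:
        return -1  # short
    return 0       # flat


def backtest(returns, positions, cost_per_unit=0.001):
    """Compound period returns, charging a cost proportional to turnover.

    positions[i] is the position held during period i; the strategy starts flat.
    Returns the final equity for an initial equity of 1.0.
    """
    equity = 1.0
    previous = 0
    for period_return, position in zip(returns, positions):
        turnover = abs(position - previous)
        equity *= 1 + position * period_return - turnover * cost_per_unit
        previous = position
    return equity


# Hypothetical forecasts from three models for two periods.
forecasts = [[0.02, 0.01, -0.005], [0.01, 0.03, 0.02]]
positions = [ensemble_signal(f) for f in forecasts]
returns = [0.01, 0.01]
gross = backtest(returns, positions, cost_per_unit=0.0)
net = backtest(returns, positions, cost_per_unit=0.001)
```

Comparing `gross` and `net` shows how much of the apparent performance is consumed by trading. Strategies that switch positions frequently are hit hardest.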

Dynamic position sizing algorithms and real-time risk exposure monitoring have become essential components of contemporary trading systems. These automated safeguards help preserve capital during periods of heightened market stress while allowing participation during favorable conditions.

Read more about AI in Real Estate Market Prediction

Hot Recommendations

- Sustainable Real Estate Design Principles

- AI in Real Estate: Streamlining the Buying Process

- Climate Risk Disclosure: A Must for Real Estate

- Climate Risk Analytics: Essential for Real Estate Investment Funds

- Modular Sustainable Construction: Scalability and Speed

- Real Estate and Community Disaster Preparedness

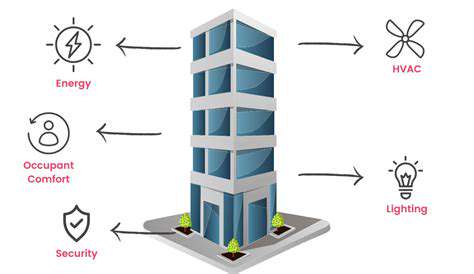

- Smart Buildings and Advanced Building Analytics for Optimal Performance

- Smart Waste Sorting and Recycling in Buildings

- Sustainable Real Estate: A Strategic Advantage

- AI in Real Estate Transaction Processing: Speed and Accuracy