Commercial Real Estate Valuation: AI's Game-Changing Algorithms

Early Valuation Methods

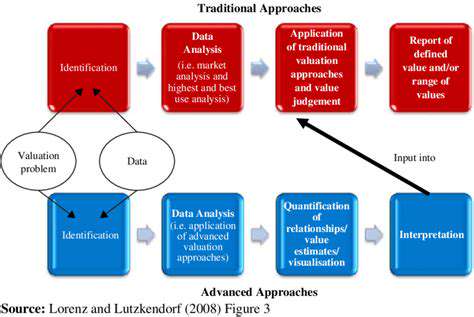

In the early days of asset valuation, professionals relied heavily on instinctive judgment rather than structured methodologies. These early approaches centered on easily observable asset characteristics, such as comparable land sales in the same neighborhood used to estimate property values, a technique that remains useful today. What these methods gained in simplicity, they lost in consistency and analytical depth, and different appraisers often reached substantially different conclusions about the same asset.

The absence of standardized frameworks meant that a valuation could vary dramatically depending on which expert performed it. This inconsistency made it difficult to compare one valuation with another and could lead to questionable business decisions based on unreliable figures.

The Rise of Discounted Cash Flow (DCF) Analysis

Valuation methodology took a major step forward with the development of discounted cash flow (DCF) analysis. DCF estimates an asset's present value by projecting its future cash flows and discounting each one back to today at a rate that reflects both the time value of money and the risk of the investment. Because the method follows explicit, documented steps that can be replicated and audited, it became a standard tool of modern valuation practice.

DCF brought greater objectivity to the valuation process: the assumptions behind a value (projected cash flows, discount rate, growth) are stated explicitly rather than left implicit. Its flexibility allows analysts to incorporate different risk factors and growth projections, making it adaptable to diverse valuation scenarios. The result is only as reliable as those inputs, however, and small changes to the discount rate or growth assumptions can move the value considerably.
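
A minimal DCF sketch in Python follows, assuming a property whose projected net operating income (NOI) arrives at the end of each year and which may be sold at the end of the holding period. The function name `dcf_value`, the exit-cap-rate treatment of the sale price, and the omission of selling costs, capital expenditures, and financing are illustrative assumptions, not a standard appraisal model.

```python
def dcf_value(annual_noi, discount_rate, exit_cap_rate=None, noi_growth=0.0):
    """Present value of projected NOI plus an optional sale (reversion) value.

    annual_noi: projected net operating income for years 1..n, received at year end.
    exit_cap_rate: if given (assumption), the property is sold at the end of year n
        for next year's NOI divided by this cap rate; selling costs are ignored.
    noi_growth: growth applied to year-n NOI to estimate year n+1 NOI.
    """
    if discount_rate <= -1:
        raise ValueError("discount rate must be greater than -100%")
    value = 0.0
    for year, noi in enumerate(annual_noi, start=1):
        # Discount each year's NOI back to today.
        value += noi / (1 + discount_rate) ** year
    if exit_cap_rate is not None and annual_noi:
        if exit_cap_rate <= 0:
            raise ValueError("exit cap rate must be positive")
        n = len(annual_noi)
        reversion = annual_noi[-1] * (1 + noi_growth) / exit_cap_rate  # sale price
        value += reversion / (1 + discount_rate) ** n  # discounted from year n
    return value
```

For example, `dcf_value([100, 100, 100], 0.10)` discounts three years of NOI of 100 at 10% and returns about 248.69. Passing `exit_cap_rate` adds the discounted sale price, which usually makes up a large share of the result, so it is worth testing several cap-rate assumptions.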

The Influence of Relative Valuation Methods

Market-driven valuation approaches emerged as powerful alternatives to intrinsic valuation methods. These techniques compare the subject asset with similar assets traded in the market, using standardized metrics such as price-to-earnings (P/E) or price-to-book (P/B) ratios; in commercial real estate, price per square foot and capitalization rates play the same role. Because it is based on prices market participants actually pay, this approach reflects current market sentiment rather than purely theoretical calculations.

The strength of relative valuation lies in its simplicity and responsiveness. Because it relies on readily available market data and established multiples, it produces quick, practical value estimates. While less theoretically rigorous than DCF, it provides a market perspective that complements other valuation techniques.
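
The comparison can be sketched as follows, assuming each comparable is described by a (price, metric) pair such as sale price and rentable square feet, or share price and earnings per share. The helper `value_from_comparables` is hypothetical; it takes the median multiple so that a single unusual sale does not skew the estimate.

```python
from statistics import median


def value_from_comparables(subject_metric, comparables):
    """Estimate value as subject_metric times the median multiple of comparable assets.

    comparables: list of (price, metric) pairs, e.g. (sale price, rentable square feet).
    Comparables with a non-positive metric are skipped.
    """
    multiples = [price / metric for price, metric in comparables if metric > 0]
    if not multiples:
        raise ValueError("need at least one comparable with a positive metric")
    return subject_metric * median(multiples)
```

With comparables trading at 200, 250, and 200 dollars per square foot, a 5,500-square-foot subject property is valued at 5,500 × 200 = 1,100,000.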

The Integration of Financial Statement Analysis

Contemporary valuation practice increasingly incorporates comprehensive financial statement analysis. Skilled analysts examine income statements, balance sheets, and cash flow statements to assess financial health and future potential. Reading these statements together reveals relationships, such as how leverage or liquidity is trending, that a single headline figure can obscure.

By examining financial trends and ratios carefully, valuers gain a deeper understanding of the business. Identifying key patterns and warning signs supports more accurate risk assessment and helps surface opportunities, which in turn improves valuation precision. Used this way, financial statements become working inputs to the valuation rather than static reports.
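
A few standard ratios and simple warning flags can be computed as below. The `Financials` fields, the choice of ratios (including the debt service coverage ratio, DSCR, commonly used for income-producing property), and especially the warning thresholds are illustrative assumptions; appropriate thresholds vary by sector and lender.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    """Selected figures from one period's financial statements (hypothetical fields)."""
    current_assets: float
    current_liabilities: float
    total_debt: float
    shareholders_equity: float
    revenue: float
    net_income: float
    net_operating_income: float  # NOI for income-producing property
    annual_debt_service: float   # principal plus interest due in the year


def key_ratios(f: Financials) -> dict:
    """Compute liquidity, leverage, profitability, and debt-coverage ratios."""
    return {
        "current_ratio": f.current_assets / f.current_liabilities,
        "debt_to_equity": f.total_debt / f.shareholders_equity,
        "net_margin": f.net_income / f.revenue,
        "dscr": f.net_operating_income / f.annual_debt_service,
    }


# Illustrative thresholds only (assumptions): each rule returns True for a warning sign.
WARNING_RULES = {
    "current_ratio": lambda v: v < 1.0,   # short-term liabilities exceed current assets
    "debt_to_equity": lambda v: v > 2.0,  # heavy reliance on borrowing
    "net_margin": lambda v: v < 0.0,      # loss-making period
    "dscr": lambda v: v < 1.25,           # thin cushion for debt payments
}


def warning_signs(ratios: dict) -> list:
    """Names of ratios that trip their warning rule, in WARNING_RULES order."""
    return [name for name, rule in WARNING_RULES.items() if rule(ratios[name])]
```

For example, `warning_signs(key_ratios(Financials(500, 400, 3000, 1000, 2000, 100, 1100, 1000)))` returns `['debt_to_equity', 'dscr']`. Computing the same ratios over several periods shows the trends the paragraph above refers to.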

The Advent of Alternative Valuation Techniques

Modern valuation challenges have spurred innovative approaches that go beyond traditional financial metrics. These methods account for intangible assets such as brand equity, intellectual property, and market position, factors that conventional methods often undervalue. They are particularly useful when standard approaches fail to capture an asset's unique characteristics.

As business models grow more complex, the valuation field continues to evolve to meet new demands. These newer methodologies aim to provide more holistic value assessments, which matters most for knowledge-based businesses where intangible assets drive much of the value.

Deep Learning for Enhanced Accuracy and Efficiency: Beyond the Surface

Unveiling the Potential of Deep Learning

Deep learning represents a significant shift in commercial analytics because of its pattern-recognition capabilities. Trained on large datasets, these neural networks can uncover nonlinear relationships that traditional analysis may miss, often improving predictive accuracy. This lets businesses ground their decisions in far more data than an analyst could review by hand.

The layered architecture of deep learning systems, loosely inspired by biological neural networks, allows them to model complex relationships. This makes them well suited to commercial applications where a nuanced understanding of the data leads to competitive advantage. Because they can be retrained as new data arrives, these models can stay relevant in dynamic markets.
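
To make the layered structure concrete, the sketch below runs a forward pass through a tiny fully connected network in plain Python: each layer takes a weighted sum of its inputs, adds a bias, and optionally applies a nonlinearity such as ReLU. The weights in `example_layers` are made up rather than trained, and training itself (typically backpropagation with gradient descent, handled in practice by frameworks such as PyTorch or TensorFlow) is not shown.

```python
def relu(x):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation=None):
    """One fully connected layer: out[j] = activation(sum_i inputs[i] * weights[j][i] + biases[j])."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def forward(features, layers):
    """Pass features through a stack of (weights, biases, activation) layers."""
    activations = features
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical, untrained network: 2 inputs -> 2 hidden ReLU units -> 1 linear output.
example_layers = [
    ([[0.5, -0.25], [1.0, 1.0]], [0.0, -1.0], relu),
    ([[1.0, 0.5]], [0.25], None),
]
```

Here `forward([1.0, 2.0], example_layers)` returns `[1.25]`: the hidden layer produces `[0.0, 2.0]`, and the output unit computes 0.0 × 1.0 + 2.0 × 0.5 + 0.25. Real models stack many more layers and units, but each layer follows this same pattern.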

Improving Accuracy in Predictive Modeling

Deep learning can improve forecasting accuracy across business functions. Sales predictions can incorporate dozens of variables, from macroeconomic trends to micro-level consumer behavior patterns. More accurate forecasts feed directly into inventory management, marketing spend allocation, and revenue planning, with a direct effect on bottom-line results.
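
Whether a deep learning forecast is actually more accurate is best checked on held-out data against a simple baseline. The sketch below assumes a naive forecast that repeats the last observed value and reports a skill score of 1 − MAE(model) / MAE(naive), where values above zero mean the model beats the baseline. The function names are hypothetical.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need equal-length, non-empty series")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def skill_vs_naive(history, actual, model_forecast):
    """1 - MAE(model) / MAE(naive), where the naive forecast repeats history[-1]."""
    naive = [history[-1]] * len(actual)
    naive_mae = mean_absolute_error(actual, naive)
    if naive_mae == 0:
        raise ValueError("naive forecast is perfect; skill score is undefined")
    return 1 - mean_absolute_error(actual, model_forecast) / naive_mae
```

For instance, with history `[100, 110]`, actual sales `[120, 130]`, and model forecasts `[118, 131]`, the model's MAE is 1.5 against the naive forecast's 15, a skill score of 0.9.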

Customer analytics benefit similarly from deep learning's pattern recognition. By identifying subtle behavioral cues, these systems can flag churn risk earlier than traditional methods, giving teams time to act. Retention strategies built on those early signals can improve customer lifetime value while focusing marketing resources where they matter most.

Boosting Efficiency in Operational Processes

Deep learning can also deliver large operational efficiency gains. Supply chain optimization models can account for hundreds of variables, such as weather patterns, traffic conditions, and supplier reliability, to minimize disruptions and costs. The resulting logistics improvements translate into competitive advantages in delivery speed and reliability.

Routine administrative tasks see similar gains. Automating data processing, customer query handling, and report generation frees staff for higher-value work. This streamlining reduces overhead while improving service quality, a dual benefit that directly enhances profitability.

Enhanced Customer Experience through Personalized Interactions

Deep learning enables personalization at scale. By analyzing thousands of data points per customer, systems can tailor experiences to each individual, which can markedly improve satisfaction metrics and build relationships that go beyond single transactions.

The business impact of personalized engagement shows up in several metrics: higher conversion rates, larger average order values, and better customer retention. Recommendation engines powered by deep learning often outperform human-curated suggestions, reflecting how well they can model customer preferences.

Optimizing Resource Allocation and Management

Resource optimization is another area where deep learning delivers value. Predictive maintenance models analyze equipment sensor data to schedule servicing when it is actually needed: not too early, which wastes resources, and not too late, which risks failure. Maintenance timed this way extends asset life while minimizing downtime.
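
The "neither too early nor too late" rule can be expressed as a risk budget. Assuming a model outputs, for each upcoming day, the probability that the equipment fails that day given it has not failed yet, the sketch below picks the latest service day that keeps the cumulative failure probability at or below a chosen limit. The function name, input format, and risk limit are assumptions for illustration.

```python
def schedule_maintenance(daily_failure_prob, max_failure_risk):
    """Return the latest day index (0-based) to service the equipment so that the
    cumulative probability of failing before service stays <= max_failure_risk.

    daily_failure_prob[d]: hypothetical model output, the probability of failure on
    day d given survival until then. Returns None if the limit is never exceeded
    within the forecast horizon.
    """
    survival = 1.0  # probability the equipment has not failed before the current day
    for day, p in enumerate(daily_failure_prob):
        # Cumulative failure probability by the end of this day if left unserviced.
        if 1 - survival * (1 - p) > max_failure_risk:
            return day  # service at the start of this day, before the limit is crossed
        survival *= 1 - p
    return None
```

With daily failure probabilities `[0.01, 0.02, 0.05, 0.10, 0.20]` and a 10% limit, the cumulative risk would reach about 17% by the end of day 3, so the function returns 3 (service at the start of day 3, counting from 0).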

Workforce management benefits in the same way. By forecasting demand fluctuations more accurately, businesses can match staffing levels to actual needs, reducing both understaffing and labor cost overruns. This balanced approach to resource allocation produces lasting efficiency gains across operations.
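
Turning a demand forecast into a staffing plan can be as simple as dividing forecast workload by per-worker capacity and rounding up. The sketch below assumes a fixed capacity per worker and a percentage safety buffer; both parameters and the function name `staff_needed` are hypothetical.

```python
import math


def staff_needed(forecast_demand, tasks_per_worker, buffer=0.1, minimum=1):
    """Staff required per period: forecast demand plus a safety buffer,
    divided by per-worker capacity, rounded up, and never below `minimum`."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    return [
        max(minimum, math.ceil(d * (1 + buffer) / tasks_per_worker))
        for d in forecast_demand
    ]
```

For example, `staff_needed([30, 95, 10], tasks_per_worker=20)` returns `[2, 6, 1]`. The buffer trades a little extra labor cost for protection against forecast error; a tighter forecast justifies a smaller buffer.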

Addressing the Challenges of Data Management and Implementation

Successful deep learning implementation requires a deliberate data strategy. High-quality, comprehensive training data is the foundation of an effective model, while poor data quality inevitably produces unreliable outputs. The adage "garbage in, garbage out" holds particularly true for machine learning applications.
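
Basic checks of this kind can catch many data problems before training. The sketch below assumes records are Python dictionaries and flags missing required fields, values outside plausible ranges, and exact duplicates; the function name, field names, and ranges in the example are hypothetical.

```python
def validate_records(records, required, ranges):
    """Return (row_index, problem) tuples for records that fail basic quality checks.

    required: field names that must be present and not None.
    ranges: {field: (low, high)} plausible inclusive bounds for numeric fields.
    """
    problems = []
    seen = set()
    for i, rec in enumerate(records):
        for field in required:
            if rec.get(field) is None:
                problems.append((i, f"missing {field}"))
        for field, (low, high) in ranges.items():
            value = rec.get(field)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{field} out of range: {value}"))
        key = tuple(sorted(rec.items()))  # order-independent fingerprint of the record
        if key in seen:
            problems.append((i, "duplicate record"))
        seen.add(key)
    return problems
```

Run on a small property dataset with one missing square footage, one negative square footage, and one repeated row, it reports each problem with its row index, so bad rows can be fixed or excluded before the model ever sees them.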

System integration presents another critical challenge. Deep learning solutions should complement existing workflows rather than disrupt them. A phased rollout with continuous performance monitoring helps ensure smooth adoption while leaving room for adjustments, and change management matters as much as the technical work in achieving the desired outcomes.
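
Continuous monitoring during a phased rollout can start with something as simple as tracking the model's recent error against the error measured during validation. The class below is a minimal sketch: the class name, the window size, the 20% tolerance, and the assumption that actual outcomes arrive soon after each prediction are all illustrative choices.

```python
from collections import deque


class ErrorMonitor:
    """Track a model's rolling mean absolute error and flag degradation.

    baseline_mae: error measured on validation data before rollout (assumed known).
    tolerance: flag when the rolling MAE exceeds baseline_mae * (1 + tolerance).
    """

    def __init__(self, baseline_mae, window=30, tolerance=0.2):
        self.baseline_mae = baseline_mae
        self.tolerance = tolerance
        self.errors = deque(maxlen=window)  # keeps only the most recent errors

    def record(self, actual, predicted):
        self.errors.append(abs(actual - predicted))

    def rolling_mae(self):
        return sum(self.errors) / len(self.errors) if self.errors else None

    def degraded(self):
        mae = self.rolling_mae()
        return mae is not None and mae > self.baseline_mae * (1 + self.tolerance)
```

A `degraded()` result of `True` is a signal to pause the rollout and investigate, for example because market conditions have shifted away from the training data, rather than an automatic verdict on the model.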

Read more about Commercial Real Estate Valuation: AI's Game Changing Algorithms

Hot Recommendations

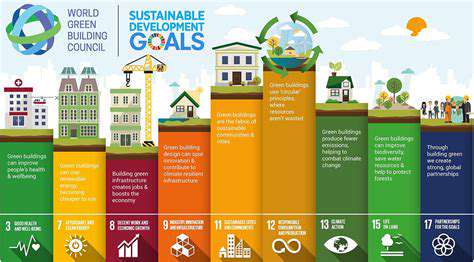

- Sustainable Real Estate Design Principles

- AI in Real Estate: Streamlining the Buying Process

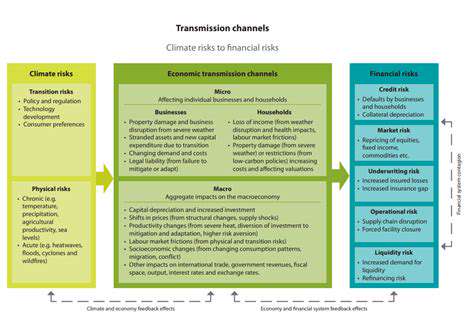

- Climate Risk Disclosure: A Must for Real Estate

- Climate Risk Analytics: Essential for Real Estate Investment Funds

- Modular Sustainable Construction: Scalability and Speed

- Real Estate and Community Disaster Preparedness

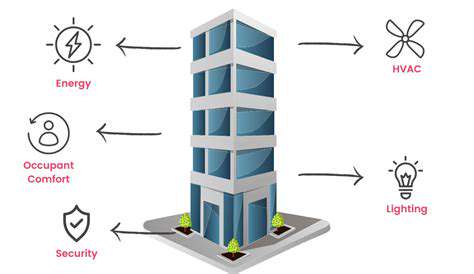

- Smart Buildings and Advanced Building Analytics for Optimal Performance

- Smart Waste Sorting and Recycling in Buildings

- Sustainable Real Estate: A Strategic Advantage

- AI in Real Estate Transaction Processing: Speed and Accuracy